Fast NLP Model Pretraining with Vampire

An Alternative To BERT

Pre-trained language models are great until you get the GPU bill or have to work on data that the model wasn’t pre-trained on (or worse yet, both).

Holy crap: It costs $245,000 to train the XLNet model (the one that's beating BERT on NLP tasks..512 TPU v3 chips * 2.5 days * $8 a TPU) - https://t.co/7OKJZHH3wI pic.twitter.com/hvvB2C4oSN

— Elliot Turner (@eturner303) June 24, 2019
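The tweet's figure follows from simple arithmetic if "$8 a TPU" is read as an on-demand price per TPU v3 device per hour. That unit is our reading; the tweet leaves it implicit.

```latex
512~\text{TPUs} \times (2.5 \times 24)~\text{h} \times \$8/\text{TPU-hour} = 512 \times 60 \times 8 = \$245{,}760
```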

The VAMPIRE paper came out in 2019 from a team at AllenAI with an accompanying repo and caught our attention for its promise of fast, lightweight pre-training that adapts to any domain. In this blog post, we’ll discuss the core ideas behind Vampire as we (at LightTag) understand them and enumerate the tradeoffs of choosing Vampire over more traditional pre-trained models.

Motivation

Pre-Trained Language Models

Pre-trained language models allow us to delegate a large chunk of NLP modeling work to a pre-trained model with a promise that we’ll only need a small amount of labeled data to fine-tune the model to our particular task. The popularity of these models is a testament to how consistently they do deliver on their promise.

Pre-trained language models deliver by encapsulating knowledge about language and (possibly) about the world in a black box. As end users, we are spared from crafting these features ourselves or from training a model that discovers them on its own.

Notably, these models are trained on a vast quantity of data that would normally be outside of our compute budget and thus have learned about a large swath of human language. The size of the data is complemented by the typical size of a pre-trained model, which has millions to billions of parameters.

The applicability of a pre-trained language model depends on two factors:

- Do we have enough compute budget to run large models?

- Was the pre-trained language model trained on a language and domain similar to our task at hand?

Put simply, sometimes the cost of putting a BERT-like model into production isn’t justifiable.

Limits on the Applicability of the Pre-Trained Models

Sometimes the factors that drive the success of pre-trained language models are the same factors that restrict their usability for a specific task:

- If our data is very domain-specific or in a language that a pre-trained model isn’t available for, the pre-training will not have contributed significantly to our task.

- Because of the generally large size of pre-trained models, they are expensive to fine-tune and to deploy to production.

With an infinite budget, these problems go away, but most of us live in a resource-constrained world where shipping fast and cost-effectively is important. When resources are a constraint, pre-trained language models can be prohibitively expensive.

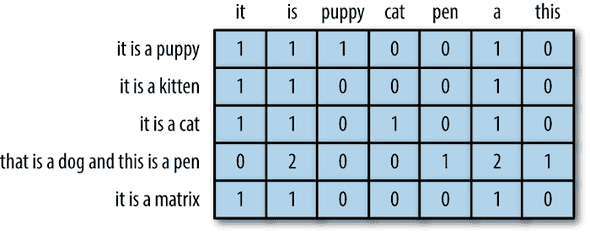

Seq2Vec and Bag Of Words

In the pre-deep learning days, we didn’t have many options for modeling sequences, and indeed most NLP work used bag-of-words representations to classify things. Deep learning brought us convenient APIs for working with sequences: the LSTM, the 1-D convolution, and the Transformer.
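To make the bag-of-words idea concrete, here is a minimal sketch in plain Python. The helper names `build_vocab` and `bag_of_words` are our own, for illustration only. Each document becomes a fixed-size vector of word counts, and the example also shows what that representation throws away: word order.

```python
from __future__ import annotations

from collections import Counter


def build_vocab(docs: list[list[str]]) -> dict[str, int]:
    """Assign each distinct token a column index, in order of first appearance."""
    vocab: dict[str, int] = {}
    for doc in docs:
        for tok in doc:
            vocab.setdefault(tok, len(vocab))
    return vocab


def bag_of_words(doc: list[str], vocab: dict[str, int]) -> list[int]:
    """Return a fixed-size count vector; out-of-vocabulary tokens are dropped."""
    vec = [0] * len(vocab)
    for tok, count in Counter(doc).items():
        if tok in vocab:
            vec[vocab[tok]] = count
    return vec


docs = [
    "the dog bit the man".split(),
    "the man bit the dog".split(),
]
vocab = build_vocab(docs)
# Both sentences map to the same vector: word order is discarded.
print(bag_of_words(docs[0], vocab))  # [2, 1, 1, 1]
print(bag_of_words(docs[1], vocab))  # [2, 1, 1, 1]
```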

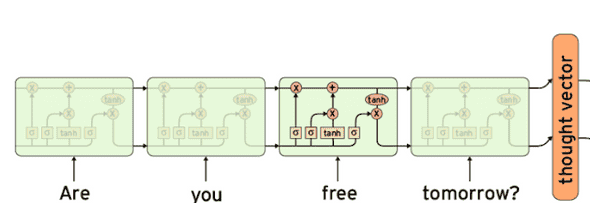

The deep learning paradigm for document classification became: encode the text with one of the above architectures to produce a vector representation, then use that representation to make a prediction. This is the Seq2Vec paradigm: how do we convert an arbitrary-length sequence into a fixed-size vector?
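A minimal sketch of a Seq2Vec encoder, written in plain Python so it runs anywhere. We use a toy Elman RNN as a stand-in for the LSTM; the class name `TinyRNNEncoder` and all sizes are hypothetical, and the weights are random rather than trained. Unlike a bag-of-words representation, the output depends on word order, yet it always has the same size regardless of input length.

```python
from __future__ import annotations

import math
import random


class TinyRNNEncoder:
    """A toy Elman RNN used as a Seq2Vec encoder (hypothetical stand-in for an LSTM).

    Weights are random here; in a real model they would be trained.
    """

    def __init__(self, vocab_size: int, emb_dim: int, hidden_dim: int, seed: int = 0):
        rng = random.Random(seed)

        def mat(rows: int, cols: int) -> list[list[float]]:
            return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.embed = mat(vocab_size, emb_dim)   # one embedding row per token id
        self.w_x = mat(hidden_dim, emb_dim)     # input-to-hidden weights
        self.w_h = mat(hidden_dim, hidden_dim)  # hidden-to-hidden weights
        self.hidden_dim = hidden_dim

    def encode(self, token_ids: list[int]) -> list[float]:
        """Read the sequence left to right; the final hidden state is the document vector."""
        h = [0.0] * self.hidden_dim
        for tid in token_ids:
            x = self.embed[tid]
            # The right-hand side is computed entirely from the previous h.
            h = [
                math.tanh(
                    sum(w * xi for w, xi in zip(self.w_x[i], x))
                    + sum(w * hj for w, hj in zip(self.w_h[i], h))
                )
                for i in range(self.hidden_dim)
            ]
        return h


encoder = TinyRNNEncoder(vocab_size=10, emb_dim=8, hidden_dim=4)
short_vec = encoder.encode([1, 2])
long_vec = encoder.encode([3, 1, 4, 1, 5, 9, 2, 6])
print(len(short_vec), len(long_vec))  # 4 4
```

In practice you would reach for a library LSTM or Transformer encoder instead; the point here is only the contract: any sequence in, one fixed-size vector out.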

One of the nice features of this paradigm is that we got an end-to-end model and process with few moving parts: put a sequence in, train with labels, and get predictions out.

Pre-trained language models leveraged that goodness. The pre-training phase initialized the parameters of the model to produce high-quality, useful representations, and the “end-to-end” nature of these models allowed fine-tuning them by simply adding a “head” on top.

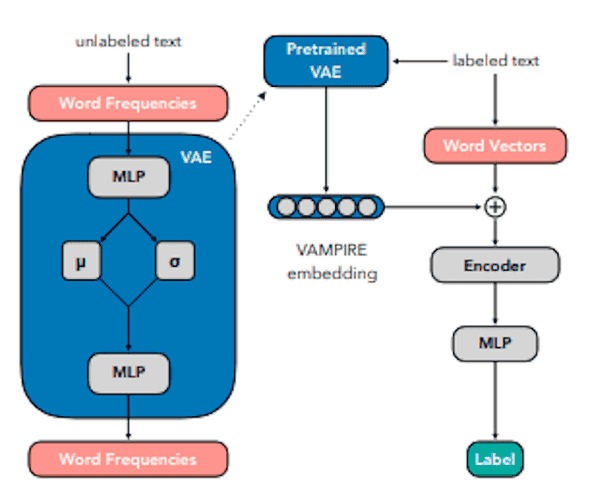

Vampire’s key insight is that pre-training on sequences is computationally expensive and that we can get useful representations at a fraction of the cost by pre-training on word counts instead. Doing so naively puts us back in the bag-of-words paradigm and forgoes the advantages of deep learning.

To regain the advantages of the Seq2Vec paradigm, Vampire pre-trains its embeddings and then has us concatenate them with the output of a Seq2Vec model (e.g., an LSTM), fine-tuning the two together to generate our final prediction (see the Advantages and Drawbacks section for a full discussion of the tradeoffs).
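Here is a minimal sketch of that concatenation step, assuming both encoders have already produced plain lists of floats. The vector values, the head's weights, and the `predict` helper are hypothetical; in practice the head's weights are learned during fine-tuning. The dimension check matters: a size mismatch between the two halves is exactly the kind of gluing bug that a two-model setup invites.

```python
from __future__ import annotations

import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(
    seq2vec_out: list[float],
    vampire_out: list[float],
    weights: list[list[float]],
    bias: list[float],
) -> list[float]:
    """Concatenate both document vectors, then apply a linear classification head."""
    features = seq2vec_out + vampire_out  # list concatenation, not element-wise addition
    if any(len(row) != len(features) for row in weights):
        raise ValueError(f"head expects {len(weights[0])} features, got {len(features)}")
    logits = [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


# Hypothetical vectors: 3 dims from the Seq2Vec encoder, 2 from Vampire.
seq2vec_out = [0.2, -0.1, 0.5]
vampire_out = [0.7, 0.3]
# Hypothetical head for 2 classes over the 5 concatenated features.
weights = [
    [0.5, -0.2, 0.1, 1.0, -1.0],
    [-0.5, 0.2, -0.1, -1.0, 1.0],
]
bias = [0.0, 0.0]
print(predict(seq2vec_out, vampire_out, weights, bias))
```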

Augmenting Seq2Vec with Bag of Words

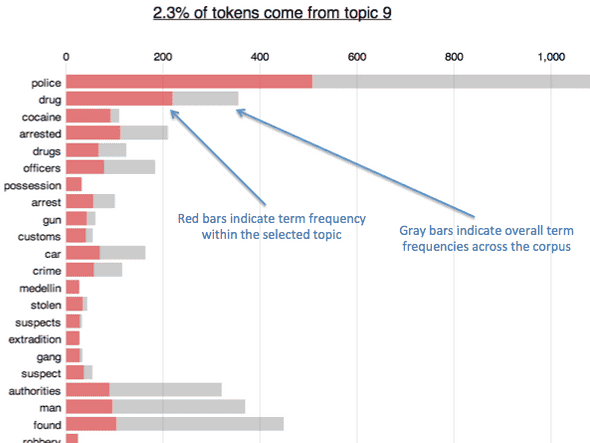

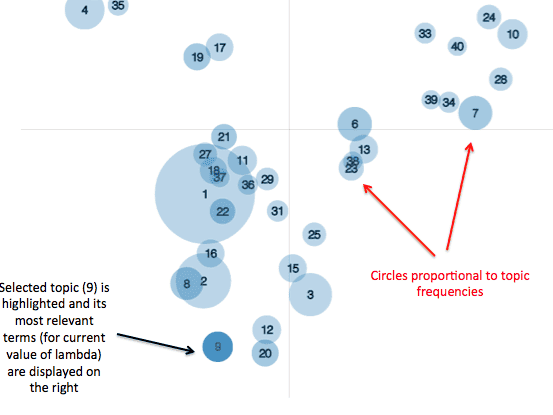

The pre-training phase of Vampire essentially fits a topic model, similar to LDA (but simpler). The assumption is that documents are a mixture of topics, and each topic defines which words are highly likely (i.e., frequent). If we knew which words belong to each topic and which topics a document was made of, we could generate documents accordingly. Turning this around, if we can infer a document’s topic mixture, we can represent the document as a distribution over topics, which is a vector representation of the document with a semantic interpretation.
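The generative story can be sketched in a few lines of plain Python. This illustrates the LDA-style assumption described above, not Vampire’s code; the vocabulary, the two topics, and their word probabilities are made up. A document’s word distribution is its topic mixture weighted over each topic’s word distribution, i.e. p(word | doc) = Σ_k p(k | doc) · p(word | k), and the mixture itself is the document vector.

```python
from __future__ import annotations

import random

# Hypothetical toy vocabulary and topics, for illustration only.
vocab = ["goal", "match", "vote", "election", "the"]
topics = {
    "sports": [0.4, 0.4, 0.0, 0.0, 0.2],    # p(word | sports), sums to 1
    "politics": [0.0, 0.0, 0.4, 0.4, 0.2],  # p(word | politics), sums to 1
}


def word_distribution(mixture: dict[str, float]) -> list[float]:
    """p(word | doc) = sum over topics k of p(k | doc) * p(word | k)."""
    probs = [0.0] * len(vocab)
    for topic, weight in mixture.items():
        for i, p in enumerate(topics[topic]):
            probs[i] += weight * p
    return probs


def generate(mixture: dict[str, float], length: int, seed: int = 0) -> list[str]:
    """Sample a document: for each word, pick a topic, then a word from that topic."""
    rng = random.Random(seed)
    names = list(mixture)
    doc = []
    for _ in range(length):
        topic = rng.choices(names, weights=[mixture[n] for n in names])[0]
        doc.append(rng.choices(vocab, weights=topics[topic])[0])
    return doc


# The mixture is the document's vector: 75% sports, 25% politics.
doc_mixture = {"sports": 0.75, "politics": 0.25}
print(word_distribution(doc_mixture))
print(generate(doc_mixture, length=8))
```

Pre-training runs this story in reverse: given only the word counts, infer the mixture.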

The Vampire Pre-Training Process

Using Vampire can be reduced to the following recipe:

- Pretokenize and vectorize your training data, and save the vectorizer so the same vocabulary is used later

- Train Vampire, a variational autoencoder, on the token frequencies of each document

- Choose a Seq2Vec model of your choice (e.g., an LSTM)

- Concatenate the output of your Seq2Vec model with the Vampire representation

- Predict your target class from the concatenated vector

- Fine-tune your joint model using standard methods
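The core of the second step can be sketched as a bag-of-words VAE. This is a plain-Python toy of the forward pass and loss only (no training loop), following the topic-model view described earlier. The class `BagOfWordsVAE`, the layer sizes, the ReLU encoder, the background term, and the standard normal prior are our assumptions; the actual Vampire implementation differs in its details. The softmax over the latent sample gives a topic mixture `theta`, which we return as the document representation to concatenate later.

```python
from __future__ import annotations

import math
import random


def rand_matrix(rng: random.Random, rows: int, cols: int, scale: float = 0.1) -> list[list[float]]:
    """Small random weights; a real model would learn these."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Matrix-vector product with plain lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def log_softmax(z: list[float]) -> list[float]:
    """Numerically stable log-softmax."""
    top = max(z)
    log_total = top + math.log(sum(math.exp(x - top) for x in z))
    return [x - log_total for x in z]


class BagOfWordsVAE:
    """Toy VAE over word counts: counts -> encoder -> latent topics -> word distribution."""

    def __init__(self, vocab_size: int, hidden: int, topics: int, seed: int = 0):
        self.rng = random.Random(seed)
        self.w_enc = rand_matrix(self.rng, hidden, vocab_size)
        self.w_mu = rand_matrix(self.rng, topics, hidden)
        self.w_logvar = rand_matrix(self.rng, topics, hidden)
        self.w_dec = rand_matrix(self.rng, vocab_size, topics)  # topic-to-word weights
        # Per-word background log-frequency; zeros here, corpus statistics in practice.
        self.background = [0.0] * vocab_size

    def loss(self, counts: list[float]) -> tuple[float, list[float]]:
        """Return (negative ELBO, topic proportions theta) for one document."""
        h = [max(0.0, v) for v in matvec(self.w_enc, counts)]  # ReLU hidden layer
        mu = matvec(self.w_mu, h)
        logvar = matvec(self.w_logvar, h)
        # Reparameterisation trick: z = mu + sigma * eps, with eps ~ N(0, 1).
        z = [m + math.exp(0.5 * lv) * self.rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]
        theta = [math.exp(v) for v in log_softmax(z)]  # softmax: proportions sum to 1
        # Decoder: word logits mix the topic-word weights and add the background term.
        logits = [d + b for d, b in zip(matvec(self.w_dec, theta), self.background)]
        log_probs = log_softmax(logits)
        # Multinomial reconstruction loss: negative log-probability of every token.
        reconstruction = -sum(c * lp for c, lp in zip(counts, log_probs))
        # Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions.
        kl = 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))
        return reconstruction + kl, theta


# A 6-word vocabulary; the counts would come from the saved vectorizer.
vae = BagOfWordsVAE(vocab_size=6, hidden=8, topics=3)
neg_elbo, theta = vae.loss([2, 0, 1, 0, 0, 3])
print(round(sum(theta), 6))  # 1.0
```

Training would minimise this negative ELBO with gradient descent over the corpus, typically in an autodiff framework such as PyTorch. Because each document enters as a single count vector rather than a sequence, each step stays cheap.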

Advantages and Drawbacks

Everything in life is a tradeoff, and using Vampire is no exception. When you choose to use it, you’re giving up a few obvious and not-so-obvious things.

Drawbacks

First, you’re giving up the sheer breadth of “knowledge” that large pre-trained language models have. That’s a lot to give up on and you should only do so if you have a good reason.

Second, you’re giving up on an entire ecosystem. Hugging Face’s Transformers library is the de facto, well-maintained implementation of many pre-trained models, with a large community. Stack Overflow is full of answers, and there are many people you can reach out to for help. Vampire, on the other hand, is research code; while the authors are kind enough to answer emails, the ecosystem around the technique is much smaller.

That also means you have less certainty that it will work for you. One of the nice things about BERT is that people have used it for more or less every problem, and someone has probably already succeeded at doing what you’re doing. The confidence that comes from knowing your approach should work is very valuable.

Finally, using Vampire is a two-stage process: you need to build two models and glue them together. Gluing things together (in this case, the Vampire VAE and your Seq2Vec model) is an easy way to introduce mistakes and bugs, and it costs developer productivity. The relative impact of this might be quite low, though, as modern transformer-based models carry their own baggage (e.g., custom tokenizers).

Advantages

Having said all that, we didn’t write about Vampire because it’s bad; we wrote about it because it’s quite great. We’ve found two good reasons to use Vampire, and when they apply, they are very good reasons to try it out. First, experimentation is much faster because models can run on CPUs in a few minutes. Second, it’s easy to ensure the model is adapted to the exact domain we’re working in, regardless of the language or how obscure the domain is.

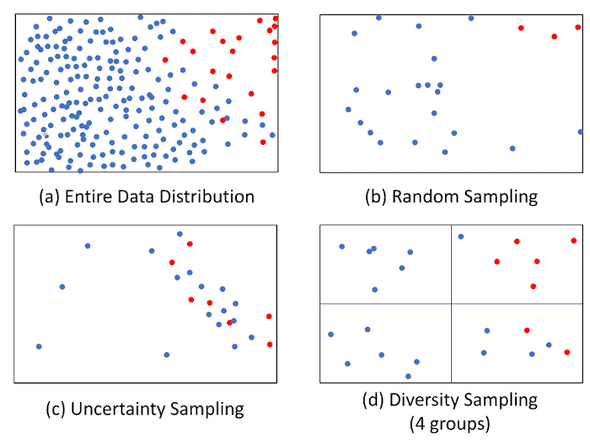

One place where Vampire particularly shines is Active Learning. Active Learning with large pre-trained models is hard because the training steps are too slow to give the user an “active” feeling. Vampire keeps the model light enough for near-real-time training steps, so the end user never has to wait for a model run to complete.

Another Active Learning challenge that Vampire helps resolve is diversity sampling. In traditional active learning, it’s hard to ensure that the samples we select are diverse, but with a VAE this becomes easier because of the natural geometric structure the VAE imparts to the data.
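One common way to exploit that geometry is greedy k-center (farthest-first) selection over the documents’ latent vectors: each new pick is the unlabeled document farthest from everything already picked. This is our illustration, not necessarily what LightTag does, and the function `farthest_first` and the 2-D latents below are hypothetical; real Vampire vectors have more dimensions.

```python
from __future__ import annotations

import math


def farthest_first(points: list[list[float]], k: int, start: int = 0) -> list[int]:
    """Greedy k-center selection: repeatedly pick the point farthest from all picks so far."""
    chosen = [start]
    # Distance from each point to its nearest already-chosen point.
    nearest = [math.dist(p, points[start]) for p in points]
    while len(chosen) < min(k, len(points)):
        idx = max(range(len(points)), key=lambda i: nearest[i])
        if nearest[idx] == 0.0:  # every remaining point duplicates an existing pick
            break
        chosen.append(idx)
        nearest = [min(d, math.dist(p, points[idx])) for d, p in zip(nearest, points)]
    return chosen


latents = [
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.1],  # a tight cluster
    [5.0, 5.0], [5.1, 5.0],              # a second cluster
    [-4.0, 6.0],                         # an outlier
]
print(farthest_first(latents, k=3))  # [0, 5, 4]
```

With three picks, the selection covers the first cluster, the outlier, and the second cluster instead of three near-duplicates from the same region.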

Final Thoughts

We’ve been experimenting with Vampire at LightTag to help our users label data faster. As a SaaS company, we needed a cost-effective way to fine-tune models to each customer’s data without sharing data between customers, while remaining adaptive to the many domains and languages our customers work in. Vampire has been a great way to give our customers the solutions they need while keeping our own compute costs manageable.

We think the long tail of natural language processing lies in niche applications in narrow domains. That means the typical NLP project is worth doing but won’t reach the scale of a service like Google Translate, and accordingly there is a limit to the returns it can generate. While the (well-justified) hype around pre-trained language models makes them the obvious first choice, solutions like Vampire can make more sense when viewed from a business (rather than a data science) perspective.

On a related note, techniques like these that are “nimble” and cheap to run mean that we can apply ML and NLP on a more ad hoc basis, for a broader user base, and thus more people can benefit from it.